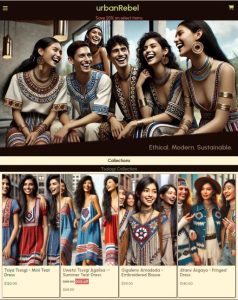

A website for “urbanRebel”, a fictional fashion brand:

I was recently offered a job as part of a team building an all-new website for a retail fashion brand. While waiting for my contract from HR, I decided to practice the relevant skills so I could get started as quickly as possible. The contract never came and it appears the project has been canceled, but rather than become discouraged, I decided to dive in and create a relatively complete portfolio project, building everything from scratch in HTML/CSS and a little bit of JavaScript. Additionally, although I was hired for a purely front-end position, I decided to go ahead and build on my minimal knowledge of Django and create a rudimentary back-end for some dynamic generation.

I don’t have much to say about building the site itself, which was quite straightforward. What I did find particularly interesting about this project was using DALL-E to create images for my fictional products. I know that those with more knowledge and experience have written and are writing volumes on this subject, but I wanted to share some thoughts as a first-timer.

While playing and wrestling with DALL-E, I kept thinking of this:

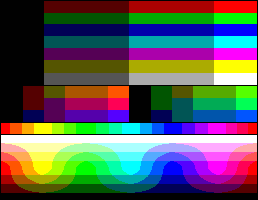

This is the set of colors available on the Enhanced Graphics Adapter. In this excellent interview, artist Mark Ferrari theorizes that the EGA palette must have been created by engineers rather than artists, because the colors are uniformly distributed across the RGB spectrum with no regard to their use (other than perhaps that of displaying high-contrast text for office applications). From an artist’s perspective, they are horrible colors. Nonetheless, determined and skilled artists such as Ferrari gradually figured out how to make outstanding images.

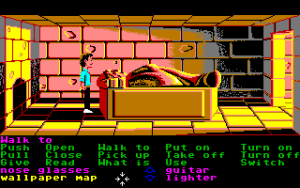
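To make the "uniformly distributed" point concrete, here is a minimal sketch of how the EGA colors fall out of simple bit arithmetic. This is my own illustration, not something from the interview, and the function names are hypothetical. It assumes the commonly documented layout: four evenly spaced levels per channel for the full 64-color set, and the default 16 colors built from one intensity bit plus one bit each for red, green, and blue, with brown as the single hand-tuned exception.

```python
from itertools import product

# Four evenly spaced levels per channel (2 bits each): the "engineer's grid".
LEVELS = (0x00, 0x55, 0xAA, 0xFF)


def ega_full_palette():
    """All 64 colors: every combination of the four levels for R, G, and B."""
    return list(product(LEVELS, repeat=3))


def ega_default_16():
    """The default 16 colors, derived from a 4-bit index (intensity, R, G, B)."""
    colors = []
    for index in range(16):
        intensity = 0x55 if index & 0b1000 else 0x00
        r = (0xAA if index & 0b0100 else 0x00) + intensity
        g = (0xAA if index & 0b0010 else 0x00) + intensity
        b = (0xAA if index & 0b0001 else 0x00) + intensity
        if index == 6:
            # Special case: dark yellow is adjusted to brown by halving green.
            g = 0x55
        colors.append((r, g, b))
    return colors


if __name__ == "__main__":
    for index, (r, g, b) in enumerate(ega_default_16()):
        print(f"{index:2d}  #{r:02X}{g:02X}{b:02X}")
```

Nothing in that arithmetic knows anything about skin tones, foliage, or sunsets, which is more or less Ferrari's complaint.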

What I find particularly interesting is the story of Ferrari’s insistence on dithering. The programmers were initially horrified when Ferrari submitted lush background scenes full of dithering, because their image compression routines (presumably using run-length encoding) were completely un-optimized for this kind of data, but once the data structures were adapted properly to the task, the quality of the product vastly improved. Zak McKracken is beloved but garish, while Loom and Monkey Island have a following of fans who prefer the subtle color techniques that only come out in the primitive 16-color palette.
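To see why dithering would upset a run-length encoder, here is a small sketch. It is a toy encoder of my own, not the actual routine used in those games (that they used run-length encoding at all is my presumption), and the pixel values are arbitrary palette indices chosen for illustration.

```python
def rle_encode(pixels):
    """Collapse a row of pixels into (run_length, value) pairs."""
    runs = []
    for value in pixels:
        if runs and runs[-1][1] == value:
            # Same color as the previous pixel: extend the current run.
            runs[-1][0] += 1
        else:
            # Color changed: start a new run of length 1.
            runs.append([1, value])
    return [(count, value) for count, value in runs]


# A flat-colored row: 16 pixels of palette index 1.
solid_row = [1] * 16

# A dithered row: two palette indices alternating pixel by pixel.
dithered_row = [1, 9] * 8

if __name__ == "__main__":
    print(len(rle_encode(solid_row)), "run(s) for the solid row")
    print(len(rle_encode(dithered_row)), "run(s) for the dithered row")
```

The solid row collapses into a single run, while the dithered row needs one run per pixel, so with a count stored alongside every value the "compressed" data ends up larger than the original.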

In the same way, I think there is a tendency to think of AI as an agnostic all-purpose tool. Engineers and programmers tend to think in terms of powers of two and neutral grids, and society at large talks about AI like it’s a mysterious omnipotent force. But in using it intensively for a couple of weeks, I found myself constantly conscious of the fact that AI is a very specific and idiosyncratic tool, extensively characterized by its material basis, dictating and informing the form and content of its output as much as a calligraphy pen or a wood lathe. It is both much less scary, and much more limited and specific than most people assume.

I used a DALL-E model trained on fashion photography. Knowing absolutely nothing about fashion, I decided to have some fun seeing if DALL-E could “imagine” some of what I’ll call “alternate history scenarios”. After some experimentation, I found that I got the best results by starting with a very simple query based on research I had done myself, then gradually iterating on it.

For instance, when I relied on the model to work out what I meant by “Cherokee clothing”, the results had a strong tendency to drift toward images of Caucasian men in Lakota war bonnets. This is because these models are built on real data, and that data has been generated by real companies creating real products for real consumers who happen to have purchasing power, in the real world with a real history. The entire process, the entire infrastructure, is based in a specific and highly irregular material reality. But after some training, I was gradually able to establish what I meant by a “Traditional Cherokee tear dress”, and I got some very nice results.

It became quite clear early on, however, that given the training data available, diverse or specific body types and ethnicities were a lost cause. I had to accept that everyone would be willowy, and that even with constant reminders not to make everybody white, the best I could do was get ethnicity in the ballpark.

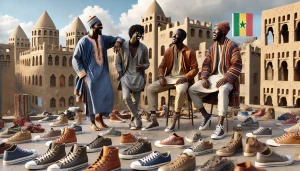

This brings me to another technique I found: training through positive rather than negative queries. In many cases I had developed a good image, but despite my constant insistence, a white guy in a Lakota war bonnet would keep creeping back in through the sheer weight of his statistical presence. No matter how I phrased it, a request like “Please, please don’t draw any Caucasian males in Lakota war bonnets” would actually lead to more of what I didn’t want. I had a breakthrough when I was coming up with some West African images. The model seems to have a pretty good handle on dashikis, but when I tried to modify a query in which the characters were barefoot so that they would be wearing shoes, it led to a cascade of shoes that I couldn’t stop. Every variation of “Create an image like this without the extra shoes lying on the ground” led to something like this:

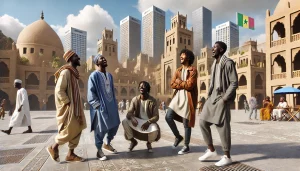

After many attempts to correct course, I considered abandoning an otherwise very promising session. What fixed it? Stating the query positively rather than negatively. The prompt “The ground all around them is free of any objects or obstacles.” produced this:

Some other surprises: the model struggled with African bogolan textiles, despite these being quite common in the mainstream media. The model was totally flustered by my attempts to recreate the characteristic Filipino baro’t saya outfit, but it was able to produce the presumably much more obscure traditional attire of the Igorot people (also from the Philippines) very quickly and beautifully. In other words, some of the queries I expected to be better represented in the training data turned out to give me more trouble.

I also decided to experiment with depictions of groups or states that are geopolitically in opposition to the home country of OpenAI/Microsoft, to see what kinds of negative stereotypes would be produced. Instead my queries were flatly refused. Something to be conscious of — computers are made out of ores and minerals, which had to be mined somewhere by someone, and they are plugged into outlets somewhere in some country. Nothing and nobody is truly neutral.

All of which is to say that these are tools with a particular structure and particular capabilities and limitations given their material basis on planet Earth. AI is not some kind of disembodied “consciousness energy”; it’s just a machine that transforms data into other data. I think we need to keep this very carefully in mind, and we would also do well to keep reminding society at large that for all the impressive achievements of technology, computers and AI are just another frankly banal type of object we started building to serve a given purpose.